The History of Artificial Intelligence: From Alan Turing to the Present Day

The journey of Artificial Intelligence (AI) is a testament to humanity's enduring quest to replicate and augment its own cognitive abilities, a saga spanning from theoretical musings to the sophisticated systems that permeate modern life. This profound evolution, marked by periods of fervent optimism and challenging skepticism, has fundamentally reshaped our understanding of intelligence itself.

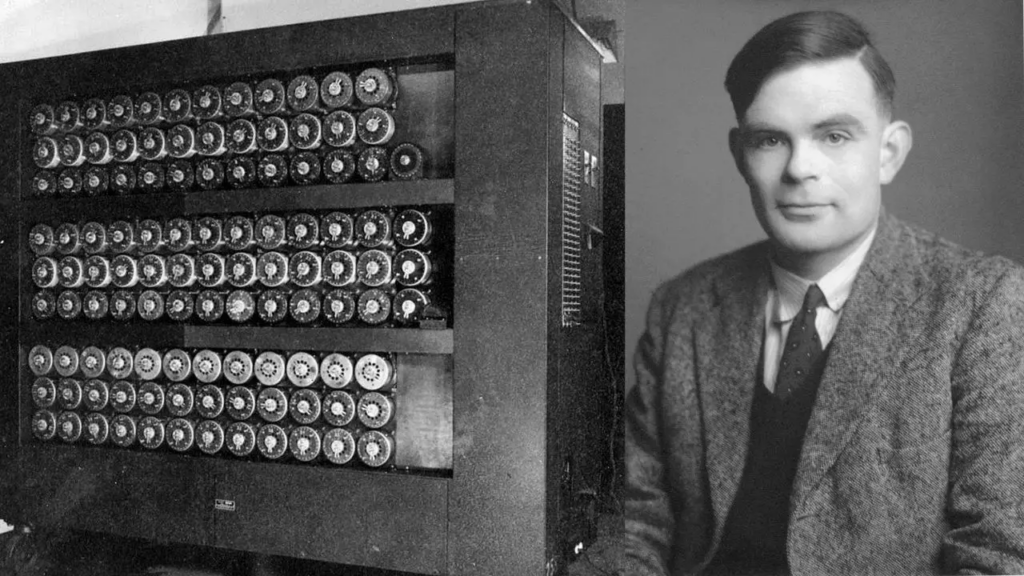

Early Foundations and Turing's Vision

The conceptual bedrock of artificial intelligence was laid before the advent of electronic computers. In his 1936 paper on computable numbers, British logician and computer pioneer Alan Mathison Turing described an abstract computing machine, now known as the universal Turing machine, which could carry out any algorithmically specifiable computation by reading and writing symbols on a strip of tape. This theoretical construct provided the logical framework for all modern computers and underscored the principle that machines could operate on, and even modify, their own programs [1]. His seminal 1950 paper, "Computing Machinery and Intelligence," famously posed the question, "Can machines think?" and introduced the "Imitation Game," later known as the Turing Test, as a criterion for machine intelligence. The test proposed that if a human interrogator could not reliably distinguish a machine from a human through text-based conversation, the machine could be considered intelligent [2][3]. Turing also foresaw the importance of machine learning, suggesting that a "thinking machine" might begin with the "blank mind of an infant" and learn over time, and he discussed early ideas akin to neural networks [2]. Before Turing's 1950 paper, Warren McCulloch and Walter Pitts had proposed the first mathematical model of artificial neurons in 1943, laying crucial groundwork for neural network research that Donald Hebb's 1949 theory of how connections between neurons strengthen with use later extended [4][5].

The Birth of AI: Dartmouth and Early Programs (1950s-1960s)

The formal birth of Artificial Intelligence as a field is widely attributed to the Dartmouth Summer Research Project on Artificial Intelligence in 1956. Organized by John McCarthy, a young assistant professor of mathematics at Dartmouth College, alongside Marvin Minsky, Nathaniel Rochester, and Claude Shannon, the workshop brought together leading minds around the ambitious conjecture that "every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it" [4][6]. The term "Artificial Intelligence" was coined by McCarthy in the 1955 proposal for the workshop, and the event established it as the name of the field [4][7]. Pioneering programs appeared both shortly before and after the workshop. In 1951, Christopher Strachey developed one of the earliest successful AI programs, a checkers program [1]. Arthur Samuel followed in 1952 with another checkers program for the IBM 701, which was notable for its ability to improve its play through experience [1][7]. Samuel also coined the term "machine learning" in 1959 [7][8]. John McCarthy developed Lisp in 1958, one of the first programming languages designed specifically for AI research [7][9]. Joseph Weizenbaum's ELIZA, created between 1964 and 1967, was an early natural language processing program that simulated conversation, most famously in the role of a Rogerian psychotherapist. Despite relying on simple pattern-matching rules, ELIZA gave many users the illusion of being understood, highlighting the psychological impact of human-machine interaction [9][10]. Other notable early programs included the Logic Theorist (1956) and the General Problem Solver (1957) by Newell, Shaw, and Simon, which aimed to mimic human problem-solving strategies [11].
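To give a feel for how little machinery ELIZA-style pattern matching needs, here is a minimal Python sketch. It is not Weizenbaum's script: the three rules, the `RULES` and `DEFAULT_REPLY` names, and the `respond` function are all hypothetical and chosen only for illustration, and the original program's keyword ranking and pronoun swapping are left out.

```python
import re

# Hypothetical, heavily simplified rules: (pattern, reply template).
# These are illustrative assumptions, not rules from the original ELIZA.
RULES = [
    (re.compile(r"\bI need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."


def respond(utterance: str) -> str:
    """Return the reply for the first rule whose pattern matches."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Reuse the captured fragment of the user's own words in the reply.
            return template.format(*match.groups())
    return DEFAULT_REPLY


if __name__ == "__main__":
    for line in ["I need a holiday.", "I am tired", "Hello there"]:
        print(f"> {line}\n{respond(line)}")
```

The program has no model of meaning at all: it only echoes fragments of the input inside canned templates, which is why the impression of understanding that users reported was so striking.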

AI Winters and the Expert Systems Era (1970s-1980s)

The initial optimism of AI's early decades eventually gave way to periods of disillusionment known as "AI winters." These downturns were characterized by reduced funding, waning public interest, and general skepticism about the field's prospects [12][13]. One significant factor was the over-ambitious promises made by researchers, which often outstripped the technological capabilities of the time [13][14]. A critical blow came in 1973 with the Lighthill Report in the UK, which highlighted the limited practical applications of AI and led to substantial cuts in government funding for AI research [7][13]. Despite these challenges, the 1980s saw a temporary resurgence driven by the rise of "expert systems." These systems, among the first truly successful forms of AI software, were designed to solve complex problems by reasoning through bodies of knowledge represented as "if-then" rules [15][16]. They comprised a knowledge base (facts and rules) and an inference engine that applied the rules to the facts [15]. Earlier systems such as Dendral, begun at Stanford in 1965, had already showcased the potential of rule-based AI in specific domains [4][17]. Expert systems proliferated, particularly in business and medical applications, leading to a brief "AI boom" [7][15]. However, their limitations (difficulty scaling beyond narrow domains, brittleness when encountering situations outside their programmed knowledge, and the cost of acquiring knowledge from human experts) ultimately led to a second, more severe AI winter in the late 1980s and early 1990s [13][18].
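The split between a knowledge base and an inference engine described above can be illustrated with a short forward-chaining sketch in Python. The facts, the two rules, and the names `RULES` and `forward_chain` are hypothetical and invented for this example; production expert systems of the period used far larger rule sets and often handled uncertainty as well.

```python
# Knowledge base: each rule is (set of premises, conclusion).
# These medical-sounding rules are made up for illustration only.
RULES = [
    (frozenset({"has_fever", "has_cough"}), "possible_flu"),
    (frozenset({"possible_flu", "short_of_breath"}), "refer_to_doctor"),
]


def forward_chain(facts, rules):
    """Inference engine: fire rules until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    observed = {"has_fever", "has_cough", "short_of_breath"}
    print(sorted(forward_chain(observed, RULES)))
```

The sketch also hints at the brittleness noted above: a case described with any fact the rules do not mention simply derives nothing, because the engine has no way to generalize beyond its hand-written knowledge.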

The Resurgence: Machine Learning and Neural Networks (1990s-2000s)

The landscape of AI began to shift dramatically in the 1990s and 2000s, driven by several key factors: the exponential growth in computational power (often attributed to Moore's Law), the increasing availability of vast datasets, and significant algorithmic advancements [19][20]. A pivotal development was the resurgence of neural networks, largely fueled by the popularization of the backpropagation algorithm in 1986, which allowed multi-layer networks to learn from data more effectively [4]. This period marked a move away from purely symbolic AI towards statistical approaches. In 1997, IBM's Deep Blue defeated world chess champion Garry Kasparov, a landmark achievement that demonstrated AI's growing analytical and strategic prowess [4][21]. Although Deep Blue relied on brute-force search combined with expert chess knowledge rather than learning, it signaled that AI could master complex tasks long dominated by humans. The 2000s saw further foundational work, with Geoffrey Hinton's 2006 contributions propelling deep learning into the spotlight and setting the stage for its later explosion [4]. In 2011, IBM Watson's victory on the game show "Jeopardy!" showcased advanced natural language processing and question-answering abilities, a significant leap in machine handling of human language [4].

The Deep Learning Revolution and Present Day (2010s-Present)

The 2010s ushered in the "deep learning revolution," a period of unprecedented acceleration in AI capabilities. A landmark event was the 2012 ImageNet competition, where AlexNet, a deep convolutional neural network, cut the top-5 error rate to about 15%, roughly ten percentage points better than the runner-up, showing that deep networks could decisively outperform earlier image-recognition methods [4][22]. This breakthrough ignited widespread interest in deep learning across many domains. In 2014, Ian Goodfellow and his colleagues introduced Generative Adversarial Networks (GANs), in which a generator network and a discriminator network are trained against each other to produce realistic synthetic data [4][9]. The world watched in 2016 as DeepMind's AlphaGo, which used deep reinforcement learning, defeated Lee Sedol, one of the world's best Go players. Unlike earlier game-playing programs, AlphaGo used learned neural networks to evaluate positions and select moves rather than relying on brute-force search alone, demonstrating that AI could master a game long thought to require strategy and intuition [20][22].

The current era is dominated by Large Language Models (LLMs) and generative AI. OpenAI's GPT-3, launched in 2020, opened new avenues for human-machine interaction, followed in 2021 by DALL-E, which generates images from text [4]. The release of GPT-4 and Google's Bard in 2023, along with Microsoft's integration of generative AI into Bing, has brought sophisticated conversational AI to the forefront, transforming search experiences and content creation [4].

However, this rapid advancement has also raised critical ethical questions. Issues such as algorithmic bias and discrimination, transparency and accountability in AI decision-making, privacy concerns related to vast data collection, and the potential for job displacement are actively debated [23][24]. The development of autonomous weapons also raises profound moral questions [23]. As of 2026, AI is deeply embedded in many sectors, with a reported 77% of devices using some form of AI and 83% of companies prioritizing AI in their business strategies [25]. The field is moving towards "agentic AI," in which AI systems act more autonomously, and "sovereign AI," in which countries aim for strategic independence in AI development [26][27]. The future promises further integration and transformation, which will require careful navigation of both the technology's immense potential and its inherent challenges.

The history of AI is a dynamic narrative of human ingenuity, persistent scientific inquiry, and the cyclical nature of technological progress. From Turing's foundational concepts to the complex deep learning models of today, AI has evolved from a theoretical dream to a transformative force, continually pushing the boundaries of what machines can achieve and prompting humanity to reflect on the very essence of intelligence.